Author: joelphilippage

-

Vampire Therapist Review

A groundbreaking gameplay style that brings traditional Cognitive Behavioral Therapy education right into the exciting realm of entertainment!

-

Querent

Querent is a truly unique turn-based tactical role-playing game. Build a unique deck of skills for each character you recruit. Make your way across an alternate Southwest America to rid the town of Sunset Junction and its inhabitants of a dark force that has possessed the animals of the land.

-

Animating with Rive

In this tutorial, I’m going to show you how I make animations for my game with Rive. You can see here a previous example I did with my miner character. Rive is a powerful tool for animation that can plug into almost any piece of code. It has a state machine interface that seamlessly blends…

-

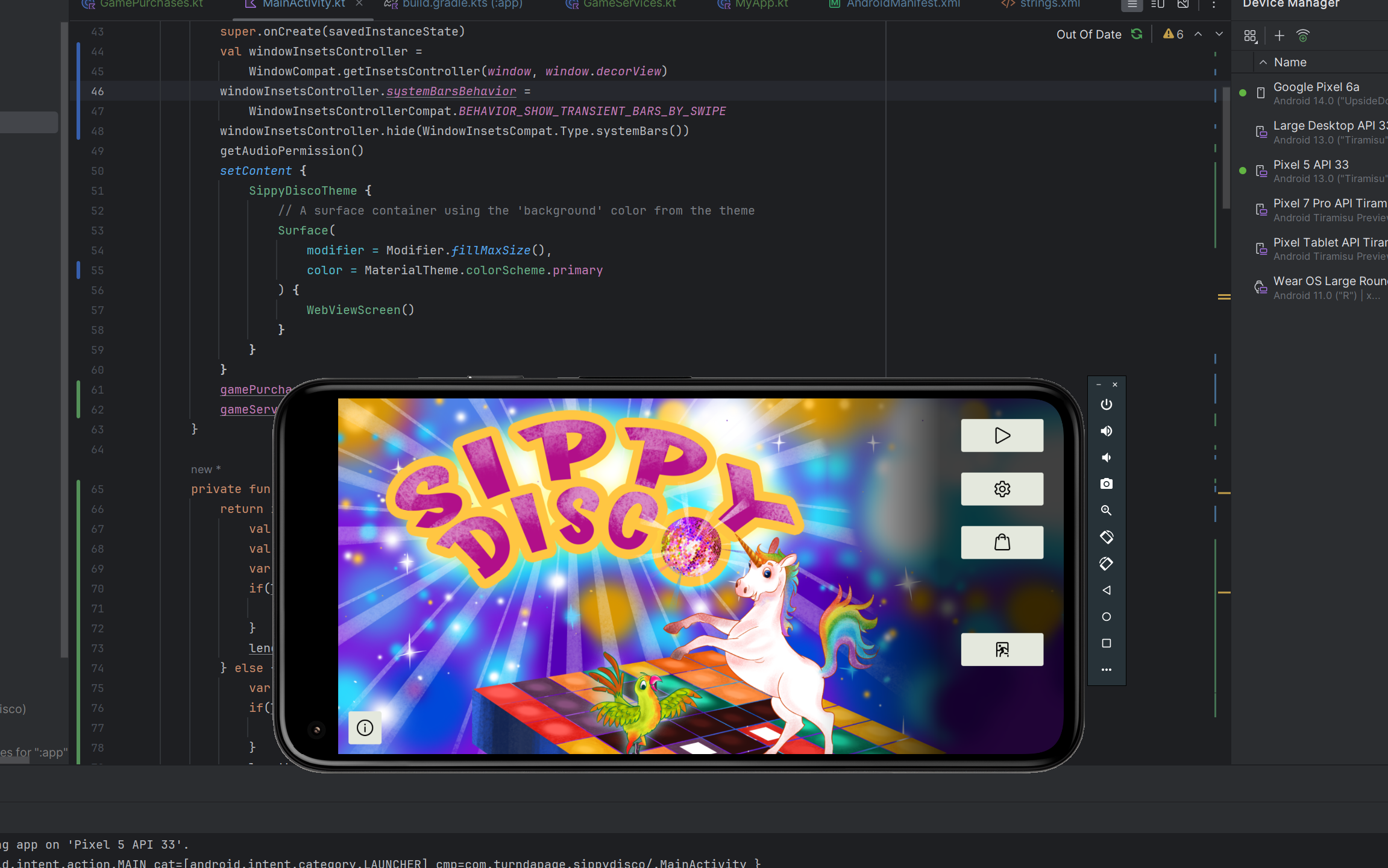

Porting Web Game to Android

This post will quickly show you how to run any standalone web-based game on Android. I recently released my game Sippy Disco on Steam and wanted to do a quick port to Android. I had originally intended to develop a wrapper with Avalonia for all platforms; it was working well on Windows and Mac…

-

Wolf in Sleep’s Clothing Card Game

Count your sheep before you fall asleep! This quick card game is great for the whole family. Be the first to stack your sheep by a corresponding number, but watch out for the wolves! Contains sixty circular cards with an instruction booklet in a portable tin.

-

Southwestern Fantasy Playing Cards

These one-of-a-kind playing cards are the perfect addition to your life! Who doesn’t like playing cards and sipping on some good coffee? Gather your friends together and show off these truly unique cards while having some fun!

-

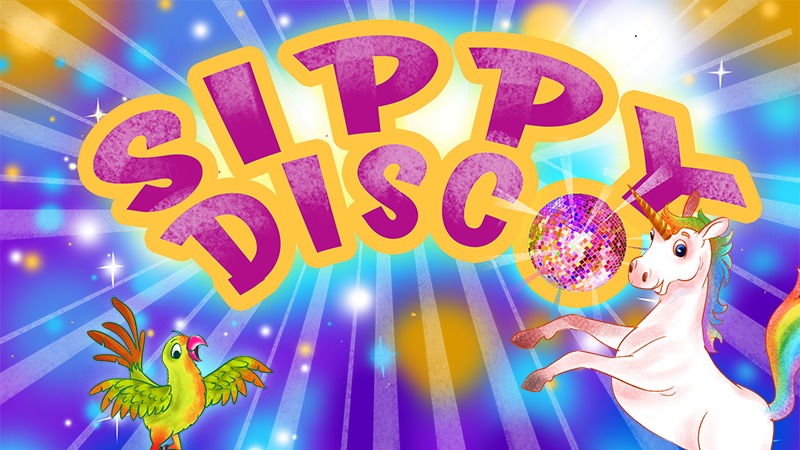

Sippy Disco

Light up the dance floor in this cozy puzzle game! Sippy the unicorn must step on every square in the fewest possible moves to make the dance floor light up. Partner with parrots on skates and disco balls to brighten everyone’s day!

-

Reuse your code in creative ways

I’m currently developing a tile-based tactical game in JavaScript for the web. It’s definitely my dream project, but I know at my current rate it’s going to be a few years before I can finish it. While I would like to just keep churning away on it, it’s been years since I shipped a…

-

Death of a Masochist – 2023

A game made for the Adventure Game Jam.

-

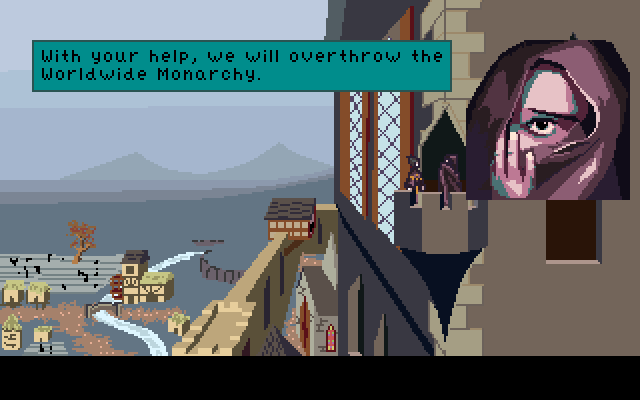

Next to Evil – 2010

Duet is a woman with a strange talent. She is also a member of the most powerful family in the world. When she sees the destruction her family causes to the empire and a strange veiled woman gives her the opportunity to betray them, she must decide if starting a revolution is really what she…